The mirage of the super-network

We live in an increasingly digital and, therefore, connected world. Through our mobile phones, we can access practically anything, anywhere. Most of the time, information flows without any issues and we tend to ignore occasional slowness and interruptions. Furthermore, the media keeps telling us that the NBN, 5G and other technological advances will make things even faster and more reliable.

Unsurprisingly, this trend encourages software companies to move their applications’ processing online, relying on high-speed networks and big servers installed someplace far away, in a data centre. However, this means that as our dependence on digital connectivity grows, we become increasingly vulnerable to unexpected and uncontrollable events that could make the systems we rely on so heavily unusable.

In a nutshell, all systems dependent on a network and a remote server work only MOST of the time. The ‘most’ part will keep increasing as infrastructure and technology improve, but there will always be some downtime. For some applications, this will never be acceptable.

Why did 000 fail in April 2018?

While in many cases interruptions don’t matter, in some situations an outage can lead to real disaster. For example, in April 2018 calls to 000 Emergency Services were cut off in most Australian states, due to a system fault in a single location. The outage lasted over 5 hours. Given that 000 receives an average of 870 calls per hour, over 4,000 people couldn’t get urgent medical, fire, disaster or police help. One has to question the design of a system that suffered a catastrophic failure after a single event. Why did a failure in NSW end up shutting down services in other states?

The big lesson from this incident is that a system requiring mission-critical capability needs a specialised architecture designed for that purpose.

When considering system architecture, it pays to view systems dependent on communication networks and remote servers as convenience facilities, intended to make our lives easier. This includes mobile phones, internet access and the myriad of apps available on mobile devices. We should be prepared for the performance of such systems to occasionally slow down, and even for them to go down. For these systems, the convenience of direct access over the network is worth the occasional outage, and the additional cost of providing mission-critical strength is not justified.

However, some systems must be designed to withstand failures of hardware, software and human error. The consequences of malfunctions in such systems are both massive and irreversible. Irreversibility carries tremendous significance: when things go wrong, moral judgement becomes far harsher if the consequences cannot be reversed.

Mission-critical system design

A mission-critical system must be designed to operate constantly, i.e. to continue providing the intended functionality despite adverse events in the environment and even within the system itself.

Such systems must be designed to be as independent as possible. In case of a failure, they may degrade, leading to a reduction in performance and functionality, but in general, mission-critical systems must be engineered to operate, no matter what.

It would be a critical design mistake to be lured by the universal availability of the internet and ever-growing network reliability and speed. A system that needs to be mission-critical must operate on its own, without the network.

Luckily, only some systems within the enterprise must be given mission-critical strength. For example, in a hospital, life support systems must continue running, but the payroll system can go down.

Similarly, only some parts within a retail enterprise demand mission-critical systems. In a declining sequence of criticality, retail systems can be listed as follows:

- Point of sale (POS)

- E-commerce

- Warehouse

- Head office

- Other related systems.

If POS goes down, you lose sales, forever. If a webshop goes down, the customer may try again within the next few minutes, but sooner or later they will move on if the outage remains unresolved.

A warehouse suffering a system failure becomes a labour sink – the warehouse team is reduced to keeping busy with housekeeping activities while they wait for the system to come back up. It costs money, but at least the customers are not directly affected.

If the head office goes down for a while, this is generally a mere annoyance rather than a trading disaster.

Distributed architecture

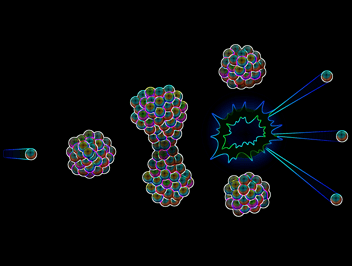

In practical terms, mission-critical systems must be designed as distributed systems, with local processing power and data storage. Access to a network and then to a remote server over the network can be used for data synchronisation (whenever possible), but it must not be needed for transaction processing.

Message queuing systems are commonly used in such environments to hold locally completed transactions until they can be synchronised. If, on the other hand, a mission-critical system has any interactive dependency on external elements, it will malfunction sooner or later. If the design flaw (in the form of remote dependency) is not rectified, tons of money can be spent trying to improve the system, to no avail. Like a house built on a shaky foundation, cracks will continue to appear.
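To make the idea concrete, here is a minimal sketch of a store-and-forward queue for a POS terminal. It is an illustration under stated assumptions rather than a description of any particular product: the class name `LocalPosStore`, the `outbox` table and the `send` callback are all hypothetical, and SQLite simply stands in for whatever local storage a real terminal would use. The point it shows is that recording a sale never touches the network, while synchronisation is attempted separately and may fail without affecting trading.

```python
import json
import sqlite3


class LocalPosStore:
    """Hypothetical local store: records sales on the terminal itself and
    queues them for later synchronisation with a remote server."""

    def __init__(self, path=":memory:"):
        # Assumption: a local SQLite database plays the role of local storage.
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS outbox ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, "
            "payload TEXT NOT NULL, "
            "synced INTEGER NOT NULL DEFAULT 0)"
        )
        self.db.commit()

    def record_sale(self, sale):
        # The transaction completes locally; no network call is made here.
        cur = self.db.execute(
            "INSERT INTO outbox (payload) VALUES (?)", (json.dumps(sale),)
        )
        self.db.commit()
        return cur.lastrowid

    def pending(self):
        # Sales that have not yet been forwarded, oldest first.
        rows = self.db.execute(
            "SELECT id, payload FROM outbox WHERE synced = 0 ORDER BY id"
        ).fetchall()
        return [(row_id, json.loads(payload)) for row_id, payload in rows]

    def sync(self, send):
        """Forward queued sales using the caller-supplied `send` function.
        Stops at the first failure and leaves the rest queued for a retry."""
        sent = 0
        for row_id, sale in self.pending():
            try:
                send(sale)
            except OSError:
                break  # network or server unavailable: keep trading, retry later
            self.db.execute(
                "UPDATE outbox SET synced = 1 WHERE id = ?", (row_id,)
            )
            self.db.commit()
            sent += 1
        return sent
```

In use, `record_sale` would be called at the checkout and `sync` on a timer or whenever connectivity returns. Note that this sketch gives at-least-once delivery: if `send` succeeds but the terminal fails before the row is marked as synced, the same sale is sent again on the next attempt, so the receiving side would need to tolerate duplicates.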

In summary, it pays to be aware that no matter how super-capable the internet becomes, it will always leave users vulnerable, as they have no control over what happens beyond their desktop or mobile device.

If a system’s functions make it mission-critical, i.e. lives or serious money depend on it, system architects must make sure that the users have everything needed to run the system locally, or at least in the same building. In our universally connected world, being local sometimes still matters – especially when the stakes are high.